Exploring the Limitations of GPT-3.5: A Case Study in Logical Disconnects

While advanced language models like GPT-3.5 demonstrate remarkable capabilities in generating human-like text, understanding their limitations is crucial for using them effectively. This brief exploration looks at a common failure point: the moment where the model’s reasoning falters and its output loses its logical thread.

When Logic Takes a Detour: Unpacking GPT-3.5’s Shortcomings

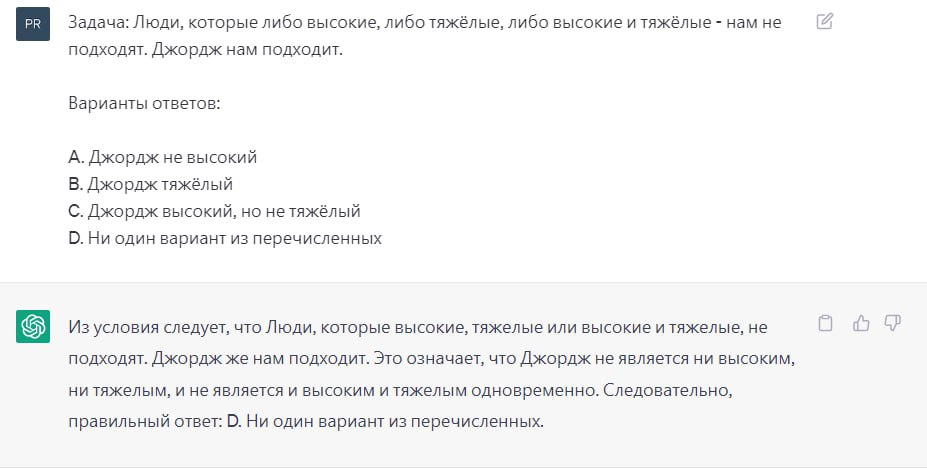

The ability of AI to process and generate information is constantly improving. However, even sophisticated models like GPT-3.5 can show surprising lapses in logical coherence. These lapses, often subtle, offer a glimpse of how the model’s architecture and training data shape its output.

The «Broken Chain» Phenomenon: A Deeper Look

Consider a scenario where GPT-3.5 is asked to explain a complex process. The explanation may begin smoothly, drawing on the broad knowledge captured in its training data. At a critical juncture, however, the model might introduce an irrelevant detail, jump to an unsupported conclusion, or fail to connect two essential steps. This is what we call the «broken chain» phenomenon: a point where the logical progression of ideas breaks down.

Common Triggers for Logical Breaks:

- Ambiguous Prompts: Vague or multifaceted questions can confuse the model, leading it to misinterpret the intended line of reasoning.

- Complex Causal Relationships: When a task requires understanding intricate cause-and-effect chains, GPT-3.5 may struggle to maintain accuracy.

- Novel or Abstract Concepts: Although the model is trained on extensive data, its ability to extrapolate to entirely new or highly abstract concepts can be limited.

- Context Window Limitations: The model can only process a fixed number of tokens at once. In very long conversations or documents, earlier information may fall outside that window or be used less reliably, undermining the consistency of its reasoning.
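To make the context-window point concrete, here is a minimal sketch of how a chat application might trim conversation history to fit a fixed budget. It is hypothetical: `fit_to_window`, `count_tokens`, and `max_tokens` are illustrative names, not part of any OpenAI API, and word counts stand in for real tokenization (GPT models use a subword tokenizer, so actual counts differ). Once the oldest turns are dropped, the model never sees them at all, which is one concrete way earlier context gets lost.

```python
from typing import List


def count_tokens(text: str) -> int:
    # Assumption: whitespace-separated words approximate tokens.
    # Real models use subword tokenizers, so true counts will differ.
    return len(text.split())


def fit_to_window(messages: List[str], max_tokens: int) -> List[str]:
    """Keep the most recent messages whose combined size fits max_tokens.

    Hypothetical helper: walks backward from the newest message and stops
    as soon as the next-older message would exceed the budget, so the
    kept history is always a contiguous, most-recent slice.
    """
    kept: List[str] = []
    used = 0
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break  # this message and everything older is dropped
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


if __name__ == "__main__":
    history = [
        "Define photosynthesis briefly.",
        "Light energy converts CO2 and water into glucose.",
        "Now relate that to climate change.",
    ]
    # With a 14-word budget, only the last two messages survive.
    print(fit_to_window(history, max_tokens=14))
```

Stopping at the first message that does not fit, rather than skipping it and continuing, keeps the retained history contiguous; a model given a gap-riddled history would face exactly the kind of missing link described above.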

For instance, when asked to describe the steps involved in photosynthesis and then follow up with the implications for climate change, GPT-3.5 might successfully outline the biological process but then struggle to draw a clear, direct link to specific atmospheric changes without further prompting or a more structured input.
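One way to supply that structure is to split the request into explicit, ordered sub-questions, so each link in the chain must be stated before the next one builds on it. The sketch below is hypothetical: `build_stepwise_prompt` is an illustrative helper that only assembles prompt text; it does not call any model API, and whether it improves a particular model’s answer is something to test rather than assume.

```python
from typing import List


def build_stepwise_prompt(topic: str, steps: List[str]) -> str:
    """Assemble a prompt that asks for one numbered answer per step.

    Hypothetical helper: turning each link in the reasoning chain into an
    explicit sub-question leaves less room for the model to skip a step.
    """
    lines = [f"Answer the following about {topic}, one step at a time."]
    for i, step in enumerate(steps, start=1):
        lines.append(f"{i}. {step}")
    lines.append(
        "Base each answer on the previous ones and state the connection explicitly."
    )
    return "\n".join(lines)


if __name__ == "__main__":
    prompt = build_stepwise_prompt(
        "photosynthesis and climate change",
        [
            "Outline the main stages of photosynthesis.",
            "Explain how photosynthesis removes carbon dioxide from the atmosphere.",
            "Describe how changes in global plant cover could affect atmospheric CO2 levels.",
        ],
    )
    print(prompt)
```

The middle step is the bridge the unstructured question tends to skip: it forces the answer to name the mechanism (CO2 uptake) before drawing conclusions about the atmosphere.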

Enhancing AI Reasoning: The Path Forward

Overcoming these limitations involves ongoing research in areas such as:

- Improved Reasoning Architectures: Developing models with more robust logical inference capabilities.

- Curated Training Data: Focusing on datasets that emphasize logical consistency and causal understanding.

- Human-in-the-Loop Systems: Integrating human oversight to guide and correct AI reasoning in real time.

Understanding these «broken chains» isn’t about dismissing the power of GPT-3.5, but rather about appreciating its current state and guiding its development. By recognizing where the logic can falter, we can design more effective prompts and applications, ultimately harnessing AI’s potential more reliably.
